You may be familiar with the Gartner Hype Cycle, if only for the humorously cynical and philosophical names of the various stages, such as “the peak of inflated expectations”, “the trough of disillusionment”, and “the slope of enlightenment”, names that sound more like chapters in a new Tony Robbins book than those of a technology classification chart.

The Hype Cycle is now over 20 years old. Given this time frame largely covers the entire rise of digital marketing, taking a little wander down innovation history lane is rather informative. It turns out not so many technologies have progressed smoothly along the adoption journey.

Aside from some now-outdated language, the first Hype Cycle from 1995 actually looks like a pretty good prediction of tech adoption. Emergent Computation is, apparently, a forefather of neural network-based machine learning, so whilst the terminology might seem unfamiliar, the technology is still very relevant in 2018.

But looking a little more closely at the many Hype Cycles since 1995, it’s clear that over the years more than a few technologies turned out to be far more hype than help and ended up slipping right off the cycle – truth verification (2004), 3D TV (2010), social TV (from 2011), volumetric and holographic displays (2012), to name but a few.

As Michael Mullany says in his article about this subject from December 2016, the tech industry (like most industries) is not very good at making predictions and also not good at looking backwards after the fact to see what it got right and what it didn’t.

This is no slight against the tech industry; almost all industries fall into this type of thinking – something Nassim Taleb pointed out some years ago in his book The Black Swan. For reasons of cognitive efficiency humans are rather lazy thinkers, so we tend to remember the easy-to-recall successful predictions and conveniently, mostly subconsciously, forget the hard-to-recall failures. This, of course, creates a terribly inaccurate feedback loop.

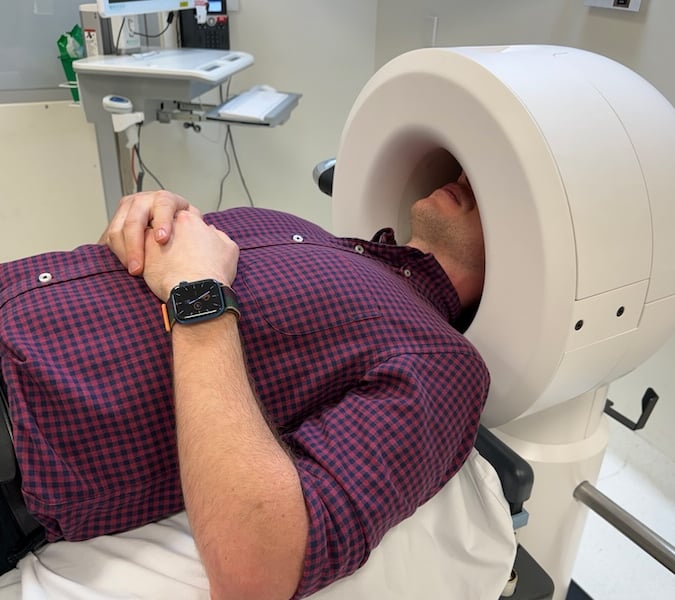

Mullany also mentions a couple of other reasons why so few technologies flow nicely through the technology adoption cycle. Many technologies are simply flashes in the innovation pan, so to speak (like the ones mentioned above, although truth verification sounds like it might have caught on had it been launched now). A good few others are constant presences in the Hype Cycle because their mainstream adoption keeps moving further into the future each year rather than closer, e.g. quantum computing and brain/machine interfaces.

Obviously, the world of marketing and advertising is becoming increasingly technology-based. If all the experts at Gartner keep making considerable errors in their predictions about technology and its uptake, what hope does our industry have with a lot less technological expertise at our fingertips?

Well, it turns out that there is good reason to be hopeful; sometimes knowing less than the leading experts can actually be a good thing, as long as it’s not too much less. This sweet spot of knowledge creates just the right amount of doubt in a point of view, which in turn helps create more open-mindedness to possible future outcomes and thereby results in better predictions than the aforementioned experts.

It’s based on the Dunning-Kruger Effect, which is more commonly used to explain why people with limited talent manage to be so overconfident, e.g. people at karaoke who think they can sing like rock stars but sound tone deaf. It’s essentially an ignorance bias whereby people with limited knowledge don’t know enough to know what they don’t know. It’s commonly represented by the neighbouring graph.

Apart from the initial burst of confidence from the ignorant, which notably is at a level even an expert fails to ever attain, the chart makes intuitive sense: as we learn more we become less confident (we know what we don’t know) until we approach expert level and become increasingly confident (we know that we know).

However, whilst confidence amongst experts is clearly more desirable than confidence amongst idiots, it still remains problematic, an area Berkeley psychologist Philip Tetlock studied extensively in the early 2000s.

He recruited 284 people who made their living providing expert predictions in the areas of politics and economics, i.e. human behaviour, and had them answer various questions along the lines of ‘Will Canada break up?’ or ‘Will the US go to war in the Persian Gulf?’. In all, he collected over 82,000 expert predictions.

His results are in some respects the inverse shape of the Dunning-Kruger chart. Knowing something about a subject definitely improves the reliability of a prediction; beyond a certain point, however, knowing more seems to make predictions less reliable.

To quote Tetlock himself: “we reach the point of diminishing marginal predictive returns for knowledge disconcertingly quickly, in this age of hyperspecialisation there is no reason for supposing that contributors to top academic journals – distinguished political scientists, area study specialists, economists and so on – are any better than journalists or attentive readers of respected publications, such as the New York Times, in ‘reading’ emerging situations”. He also concluded that in many situations, the more famous or expert the person doing the predicting was, the less accurate the prediction.

The key issue is that experts’ knowledge, combined with their high level of confidence, prevents them from entertaining less likely but still highly possible outcomes. Their expertise leads them to become somewhat close-minded; they drink their own Kool-Aid, so to speak. The merely knowledgeable, by contrast, are less confident in themselves and their predictions and so are far more likely to assess alternative outcomes, which makes their viewpoints and predictions more accurate.

This position of knowing a reasonable amount is the natural place for agencies. Our ‘expertise’ is more an accumulation of many areas, none of which we are specifically expert in. We know a fair bit about human behaviour, a fair bit about marketing, a fair bit about technology, a fair bit about media channels and popular culture and a fair bit about our clients’ businesses. We know less about each individual area than a single discipline expert or global authority but our ‘expertise’ is in blending our working knowledge of each area together. In today’s world this positioning is invaluable and not one easily copied by a tech firm, a consulting firm or by clients themselves – they’re all deep experts in their own fields making them less open-minded toward non-typical outcomes or new ideas and innovations.

However, this is perhaps a position we have not always employed as well as we could. Whilst Gartner has clearly over-hyped more than a few technologies, the world of marketing and advertising has also been responsible for a number of unnecessary websites (my personal favourite is still bidforsurgery.com), apps and VR/AR games.

That isn’t to say there haven’t been some fantastic applications of these technologies, more that we haven’t always responsibly applied our ‘reasonably knowledgeable’ positioning. This sweet spot of open-mindedness and knowledge should allow us to be better at putting new technology into context than tech companies whose experts typically place too much importance on their own area of specialty. But to maximise our position we need to stop using technology because it’s new or because it makes us appear innovative.

Our current industry obsession seems to be AI, which incidentally Gartner currently has at the top of the peak of inflated expectations. Many of the headlines talk about AI taking jobs, killing brands, taking over marketing, making ads, getting smarter than us and becoming existentially dangerous. To be fair, it is doing some amazing things both in marketing and in other areas of life, real-time language translation and cancer spotting to name just two (it’s also obviously worthwhile thinking about avoiding being wiped out by our own inventions). However, to avoid misapplying new technology in some of the ways we have in the past, we must balance the above headlines with some less dramatic points of view, such as:

“It would be more helpful to describe the developments of the past few years as having occurred in ‘computational statistics’ rather than in AI” – Patrick Winston, professor of AI and computer science at MIT.

“Neural nets are just thoughtless fuzzy pattern recognisers, and as useful as fuzzy pattern recognisers can be” – Geoffrey Hinton, cognitive psychologist and computer scientist, Google / University of Toronto.

“The claims that we will go from one million grounds and maintenance workers in the U.S. to only 50,000 in 10 to 20 years, because robots will take over those jobs are ludicrous. How many robots are currently operational in those jobs? Zero. How many realistic demonstrations have there been of robots working in this arena? Zero.” – Rodney Brooks, Australian roboticist, Fellow of the Australian Academy of Science and former Panasonic Professor of Robotics at MIT.

None of this is to suggest that we should stop using AI or employing the latest technologies, just that to exploit the ‘reasonably knowledgeable’ position we must be balanced in our assessment of innovations.

The open-mindedness and objectivity that come from sitting between the idiots and the experts are becoming ever more valuable as the world gets more complex and fills with more brilliant, but close-minded, experts. The great thing for us is that it’s not a position that is easily copied, and if we exploit it well it should help us compete against consulting firms, client ‘in-housing’ and some areas of automation.

And at the very least it should help us avoid sounding ludicrous, something that is going to be rather tricky when we start talking about smart dust, the most recent entrant in the latest Gartner Hype Cycle.

-

Simon Bird is PHD’s strategy director.

-

This story originally appeared on StopPress.