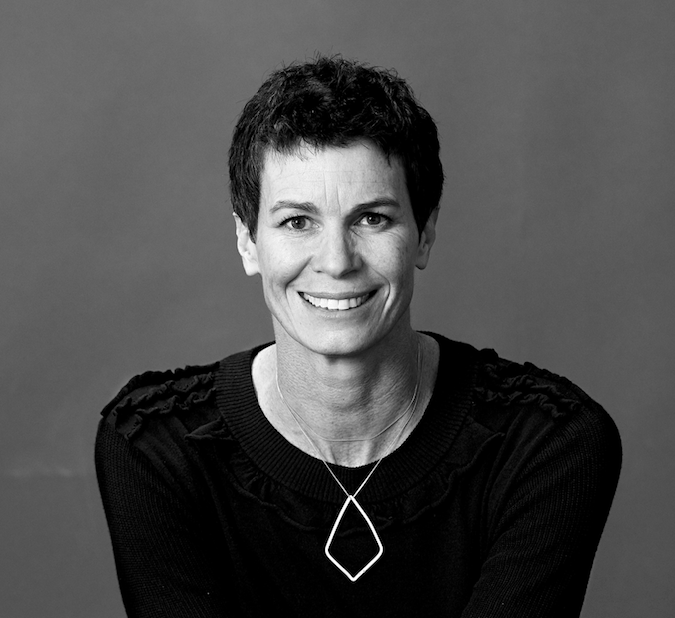

Microsoft’s global AI leader on tackling sexism and racism: “The beautiful thing about AI is it’s a set of tools to make all of us more intelligent”

While it was once an alien concept, AI is now infiltrating many aspects of our lives, from autonomous cars, to smart homes and objects, to translation apps, to automated assistants at a person’s beck and call.

By 2025, AI software revenue is predicted to exceed US$59 billion, while by 2018 more than 3 million workers globally are expected to be supervised by a “robo-boss.”

But one aspect of AI that’s increasingly becoming a topic of discussion is its cultural and social effects, now that governments, companies and society are grappling with the consequences of the new technology.

If robots have been designed by humans to mimic humans, then how can we prevent unconscious bias and darker elements of humanity, such as racism, sexism and homophobia, arising in technology?

As recent events prove, sometimes the invention of such a technology can be like holding up a mirror and seeing an uncomfortable reflection of the human race staring back.

Take the Twitter chatbot Microsoft created last year called TayTweets, which was targeted at 18- to 24-year-olds in the US and could learn and develop talking points as it interacted with users on the platform.

However, things took an ugly turn when Tay pledged allegiance to Hitler and began repeating alt-right talking points, such as calling the 9/11 attacks an inside job.

The account was eventually silenced, but the experiment demonstrated that robots can become as sexist, racist and prejudiced as humans can be, if humans are who they’re acquiring their knowledge from.

Which raises the question: is tech moving at such a frenetic pace that society isn’t quite developed enough to keep up with it? Should we be hitting the pause button and attempting to solve these societal problems of sexism and racism before we delve into this futuristic technology?

Microsoft global AI leader David Heiner doesn’t think so. He says issues like sexism and racism are huge challenges, but the good thing about AI is that it can unearth biases that might not otherwise have been discovered, while also having the ability to crunch data to find the best way to tackle them.

“The beautiful thing about AI is it’s a set of tools to make all of us more intelligent, to the extent that we’re trying to apply intelligence to address things like sexism and racism. AI tools can help us do that, as it can analyse when society is allocating resources in a way that isn’t fair and what’s the best way to address it.”

Heiner is also confident that despite the hiccups encountered along the way, there are several ways to prevent unconscious bias creeping into technology.

Analytical techniques are being developed that can help assess when a particular data set is biased, he says, while in cases where a data set reflects society as a whole, the work of the academic community becomes key.

Microsoft has recently teamed up with representatives from Google, Cloudflare and several US universities to create FATML (Fairness, Accountability and Transparency in Machine Learning), an organisation that works with academics to figure out how bias creeps into technology and how to get rid of it.

The other initiative tackling this problem is the Partnership on AI group, which Microsoft co-founded alongside fellow tech behemoths Google and IBM. It includes representatives from organisations like Facebook and Amazon, while Apple’s Tom Gruber, the chief technology officer of AI personal assistant Siri, joined the ranks this year.

“The idea is that across the industry, we bring people together who are deep thinkers on these subjects like privacy, transparency and ethics, and see if we can come to some consensus, exchange ideas and develop guidelines we can deploy, get experience with and get better at,” Heiner says.

He says another way to prevent AI bias is to attract more diversity to the profession, as it’s famously not diverse.

“If the systems are only being built by young, white men, the biases are likely to come through a lot higher.”

But with all this said and done – and the examples of where AI has gone astray and reflected the worst of humanity – he says an important point to consider is that AI is capable of accomplishing a lot of social good, too.

“Our sense is that AI is going to be wonderful. It is a form of intelligence and it’s unfortunate that it’s called artificial intelligence – it was named in the 50s, when the term artificial didn’t have negative connotations – when really it’s computational intelligence that’s all created by people,” he says.

“If people don’t trust it, they’re less likely to deploy it or share data about themselves, and without data about people, an AI system can’t make decisions. It’s imperative there’s an ethical framework in place so people trust it.”

He says the technology industry needs to commit to building AI in a way that’s secure, reliable and can’t be tampered with, while helping benefit humanity instead of hindering it.

“The high-level vision is that we want to build systems where the goal is to augment human capability. We don’t want to replace somebody, we want to teach machines to do amazing things to help people, it becomes like a tool, like how the radiologist gets better with the system.”